The winding journey of fiber optics is a story of persistent progress. From Daniel Colladon’s 1841 demonstration of light guidance in water to recent advances empowering multi-terabit infrastructure, researchers continuously pushed the boundaries of optical communication.

Early steps like total internal reflection concepts and the first glass fibers set the stage. Later came lasers, amplifiers, and sophisticated multiplexing, each breakthrough building capacity until today’s global networks carry enormous volumes of data over hair-thin strands of glass.

While challenges remain, fiber’s future as the long-haul backbone appears assured, thanks to ongoing innovation. Tracing the key milestones in fiber optics provides insight into this transformative technology.

1790 – Optical Telegraph

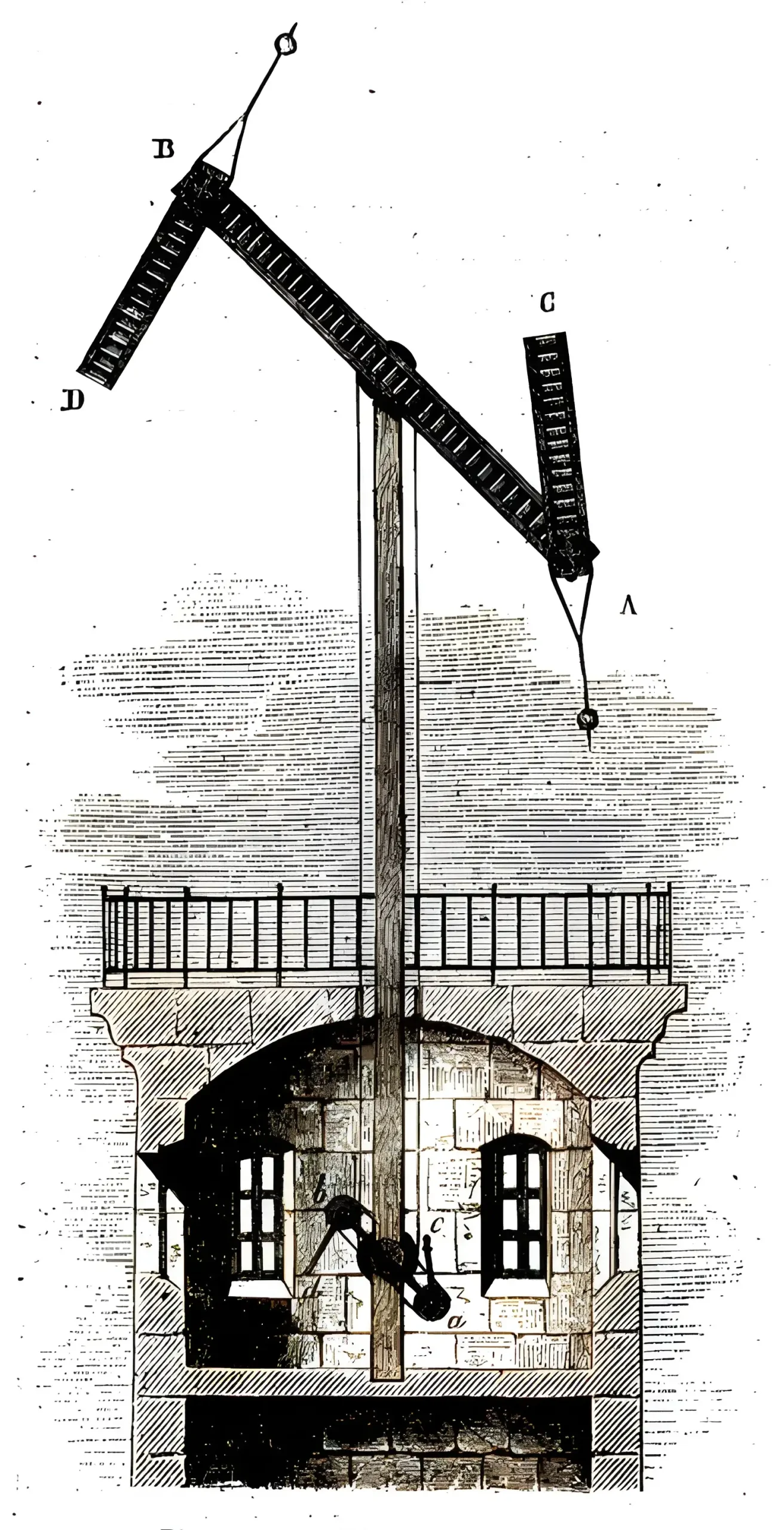

The optical telegraph, invented by Claude Chappe in 1790, was the first practical telecommunications system using optical technology. It comprised a series of towers spaced 10-30 km apart, with movable semaphore arms on top that could be oriented at various angles to signify different letters and numbers.

An operator in each tower would observe the semaphore arm positions using a telescope and decode the message, then retransmit it to the next tower. This allowed visual transmission of information at 2-3 words per minute across long distances.

The first optical telegraph line was set up between Paris and Lille in 1794, spanning 193 km with 22 stations. Soon, the network expanded to cover much of France and parts of Europe.

Though extremely slow by modern standards, the optical telegraph marked a huge leap over reliance on the physical transportation of messages. It remained in use for over 60 years until electric telegraph systems became operative in the 1850s.

Some key facts about the optical telegraph:

- Invented by Claude Chappe in France in 1790

- Semaphore arms on towers spaced 10-30 km apart

- Arm angles encoded letters and numbers

- Transmission rate of 2-3 words per minute

- The first line between Paris and Lille spanned 193 km.

- Remained in use for over 60 years until electric telegraphs emerged

1841 – Total Internal Reflection

The principle of total internal reflection was first demonstrated for light in 1841 by Daniel Colladon, a Swiss physicist. He directed light from an arc lamp into a stream of water while observing the light’s path. Colladon showed that light could be guided through a curved trajectory within the water jet by undergoing total internal reflection at the water-air boundary with minimal losses.

This experiment by Colladon established that light could be transmitted through media like water and glass fibers using successive total internal reflections, forming the basis for modern fiber optics.

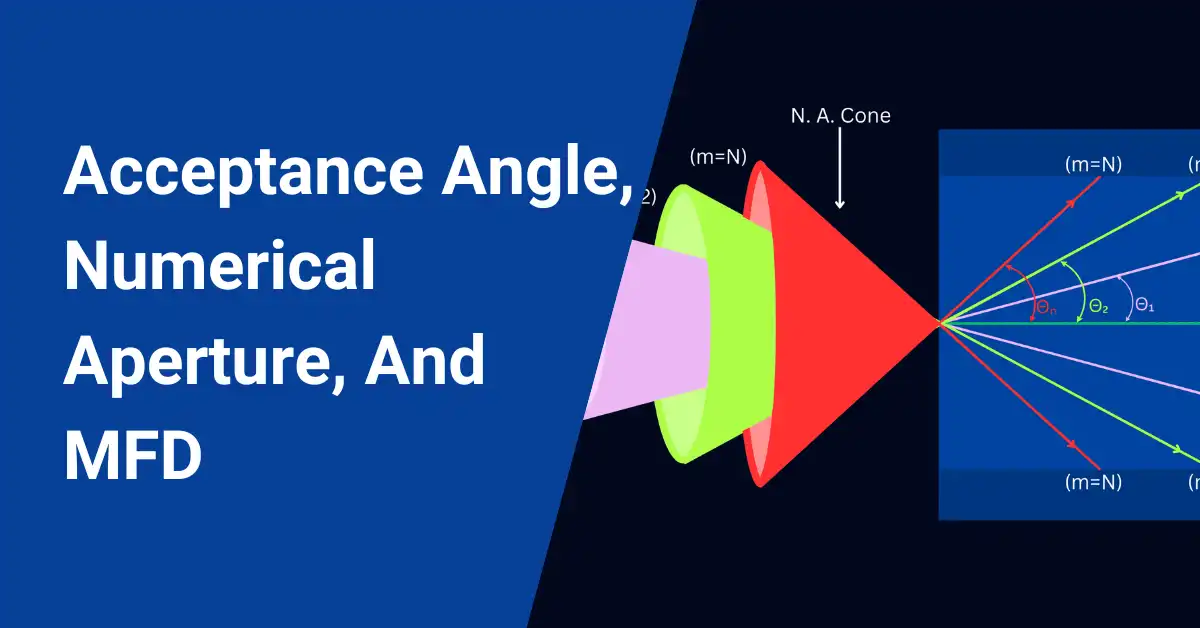

For total internal reflection to occur, light must travel in a medium whose refractive index is greater than that of the surrounding material, and it must strike the boundary at an angle steeper than the critical angle. In a glass fiber, for instance, the core has a higher refractive index than the cladding, causing reflection at the boundary and confining light to the core.
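The condition above can be made concrete with Snell's law, which gives the critical angle as arcsin(n_outside / n_inside). The following is a minimal sketch; the function name and the refractive indices (about 1.33 for water, 1.00 for air) are illustrative textbook assumptions, not values from Colladon's experiment.

```python
import math

def critical_angle_deg(n_inside: float, n_outside: float) -> float:
    """Smallest angle of incidence (measured from the normal) that gives
    total internal reflection at the boundary between two media."""
    if n_outside >= n_inside:
        raise ValueError("total internal reflection needs n_inside > n_outside")
    return math.degrees(math.asin(n_outside / n_inside))

# Assumed textbook indices: water ~1.33, air ~1.00
water_jet = critical_angle_deg(1.33, 1.00)
print(f"water/air critical angle: {water_jet:.1f} degrees")
```

Light hitting the water-air boundary at a larger angle than this stays inside the jet, which is why a gently curving stream can carry it along.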

Colladon’s observations also implied improvement in illumination systems, with light directed from a source through reflecting tubes and refractors to provide lighting.

Contemporaneous designers used the principle to illuminate lighthouses and streetlights more efficiently. Colladon’s pioneering work with total internal reflection proved the possibility of guided light transmission, paving the path for later developments in optical waveguides.

Key facts on total internal reflection:

- Demonstrated by Daniel Colladon in 1841 using a water jet

- Showed light can follow a curved trajectory through repeated reflections

- Occurs when light encounters a boundary to a material of lower refractive index

- Allowed development of improved illumination systems at the time

- Established the possibility of transmitting light through optical waveguides

1880 – Photophone

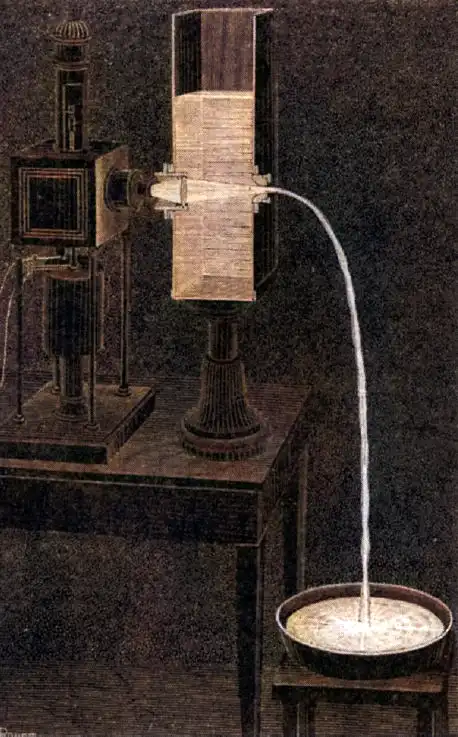

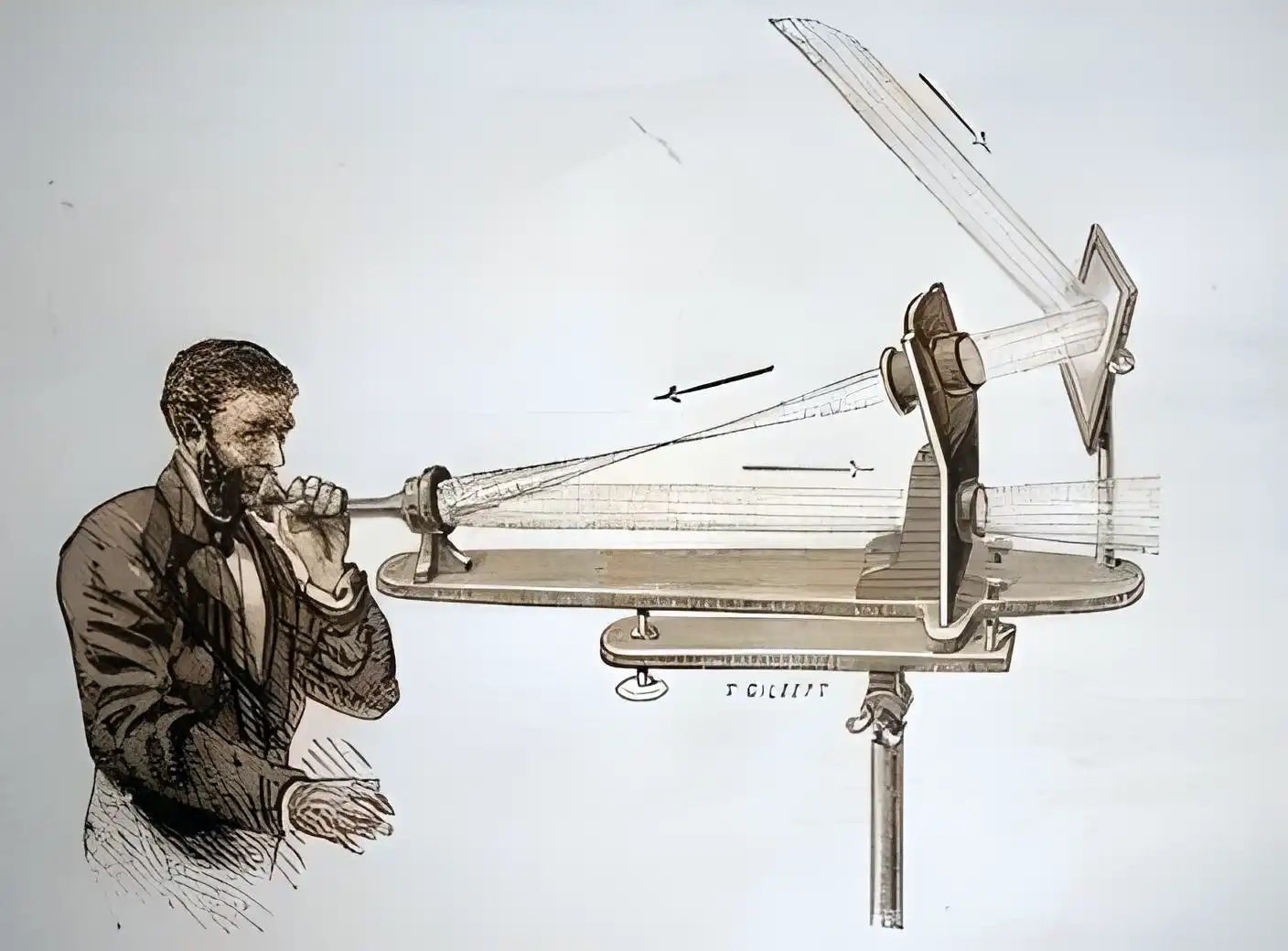

Alexander Graham Bell invented an early wireless voice communication device called the photophone in 1880, just 4 years after patenting the telephone. It transmitted sound over a beam of light rather than electric signals.

Bell designed a transmitter consisting of a flexible mirror attached to a mouthpiece. The mirror vibrated as the user spoke into the mouthpiece to modulate the sunlight reflecting off it. This modulated light was directed across open space to a receiver unit, which consisted of a parabolic mirror focused on a selenium cell.

The receiver converted light variations back to electrical signals through the selenium cell, which could be heard through a telephone headset.

The photophone could transmit intelligible speech wirelessly over 200 meters. But its practical use was limited – it required sunlight, worked only in line-of-sight, and could be obstructed by bad weather.

Nonetheless, it was an important milestone in optical communications. Bell regarded the photophone as his most important invention, believing the future lies in transmitting intelligence by light.

Key aspects of the photophone:

- Invented by Alexander Graham Bell in 1880

- Transmitted sound by modulating a light beam

- Used a flexible mirror at the transmitter vibrating in response to speech

- Photocell at the receiver converted light variations back into sound.

- Could transmit understandable voice over 200 meters

- Hindered by the need for sunlight and line-of-sight alignment

1948 – Shannon Communication Model

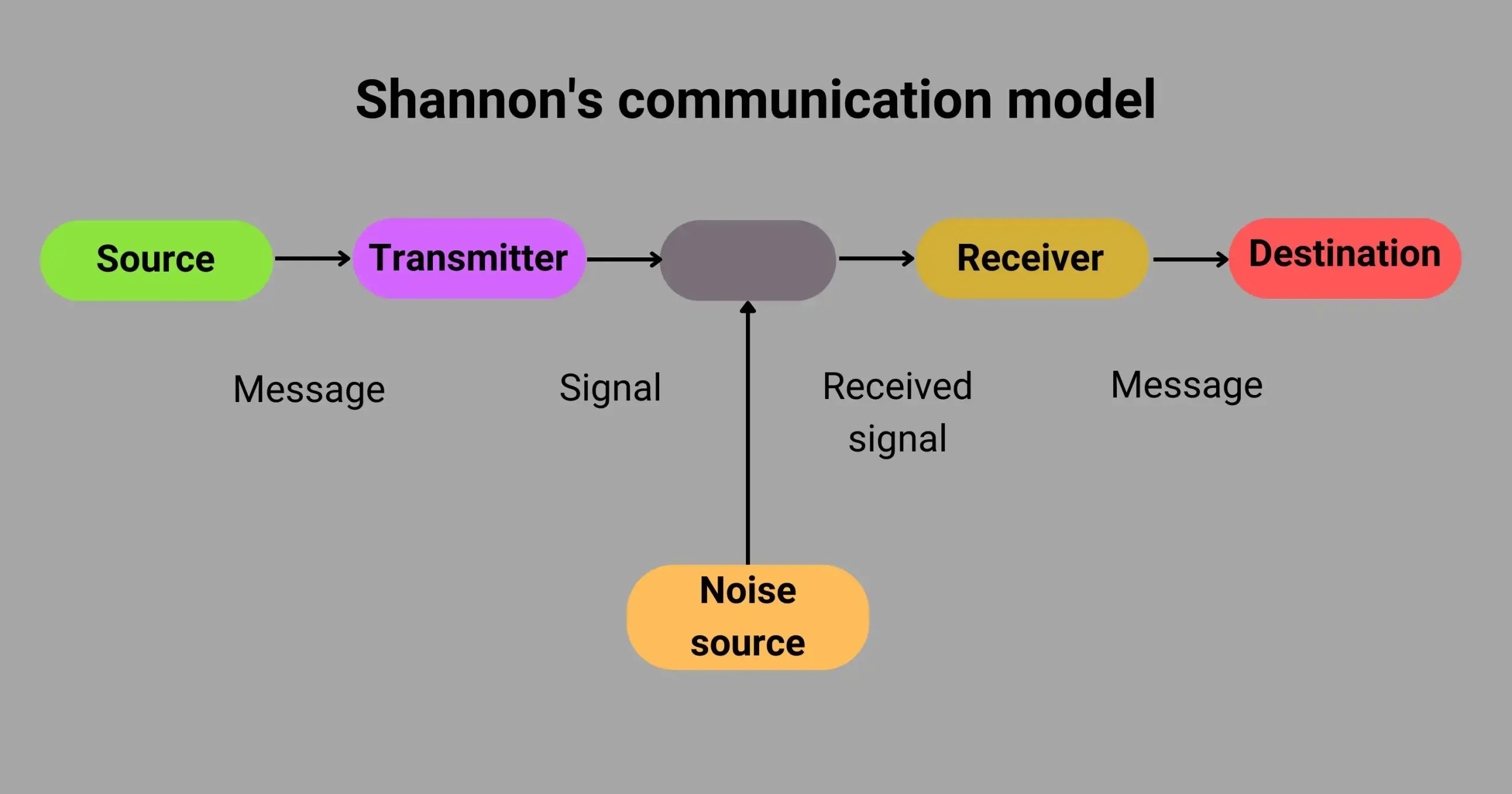

Claude Shannon, known as the “father of information theory,” published a landmark paper in 1948 that provided the theoretical foundation for modern telecommunications. Shannon’s paper “A Mathematical Theory of Communication” introduced a general model depicting the key elements in a communications system.

As per Shannon’s model, the information source generates a message to be communicated, which the transmitter converts into signals for transmission through a channel or medium.

In the channel, noise from external disturbances corrupts the signal. The receiver then reconstructs the message from the received signal and passes it on to the destination. Shannon analyzed each component mathematically, quantifying parameters like channel capacity for reliable communication.

Shannon’s work established fundamental limits on signal processing and coding techniques to achieve efficient and error-free telecommunications.

His research on information theory profoundly influenced the digital revolution in transmitting all types of data. Concepts proposed by Shannon, like source coding and channel coding, are ubiquitous in today’s communication systems and data compression algorithms.
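One well-known result tied to Shannon's work is the capacity of a band-limited channel with Gaussian noise, C = B · log2(1 + S/N). The sketch below simply evaluates that formula; the function name and the sample bandwidth and signal-to-noise values are illustrative assumptions.

```python
import math

def shannon_capacity_bps(bandwidth_hz: float, snr_db: float) -> float:
    """Upper bound on error-free bit rate for a noisy band-limited channel."""
    snr_linear = 10 ** (snr_db / 10)  # convert decibels to a power ratio
    return bandwidth_hz * math.log2(1 + snr_linear)

# Hypothetical channel: 1 MHz wide, evaluated at a few signal-to-noise ratios
for snr_db in (0, 10, 20, 30):
    capacity = shannon_capacity_bps(1e6, snr_db)
    print(f"SNR {snr_db:>2} dB -> {capacity / 1e6:.2f} Mbps")
```

Capacity grows linearly with bandwidth but only logarithmically with signal power, which helps explain why later milestones focus on opening up more optical spectrum.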

Major aspects of Shannon’s communication model:

- Published in 1948 paper “A Mathematical Theory of Communication”

- Established the theoretical basis for modern telecommunications

- Components: information source, transmitter, channel, noise, receiver, destination

- Quantified communication channel capacity limits

- Concepts like source/channel coding are pivotal in digital data transmission.

1957 – First Glass-Clad Fiber

The first fiber optic strand with a glass core and cladding was developed in 1957 by Lawrence Curtiss, an American physicist. Earlier fibers used plastic cladding, which degraded over time and limited light transmission.

Curtiss’s innovation was fabricating the fiber by inserting a rod of glass with a high refractive index into a tube of glass with a lower refractive index. This assembly was heated and drawn out into a fiber, with the rod forming the core and the tube forming the cladding.

The refractive index difference enabled total internal reflection within the glass fiber core, allowing light propagation even through winding paths.

Initially, Curtiss coated the core-clad glass fiber with an opaque plastic jacket to demonstrate light conduction. The first bare glass-clad fibers had losses of about 1000 dB/km, a figure that later work on purer glass brought below 20 dB/km.
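To put dB/km figures like these in perspective, attenuation in decibels converts to a surviving power fraction of 10^(-loss/10). The sketch below applies that conversion; the function name and the sample lengths are illustrative assumptions.

```python
def remaining_fraction(loss_db_per_km: float, length_km: float) -> float:
    """Fraction of the launched optical power left after length_km of fiber."""
    total_loss_db = loss_db_per_km * length_km
    return 10 ** (-total_loss_db / 10)

# At 1000 dB/km, only 10% of the light survives a 10 m run (10 dB of loss)
print(remaining_fraction(1000, 0.01))
# At 20 dB/km, 1% of the light survives a full kilometer
print(remaining_fraction(20, 1.0))
```

Because the scale is logarithmic, going from 1000 dB/km to 20 dB/km is the difference between a fiber useful over meters and one useful over kilometers.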

Curtiss’s glass-clad fiber paved the way for the low-loss fibers emerging in the 1960s, enabling practical optical communications.

Key details on the first glass-clad fiber:

- Developed by Lawrence Curtiss in 1957

- Fabricated by rod-in-tube method

- Glass core with high refractive index, glass cladding with lower refractive index

- Refractive index difference enabled total internal reflection

- Initial versions had a plastic jacket and losses of ~1000 dB/km

- Led to the later development of low-loss glass fibers

1958 – Invention of the Laser

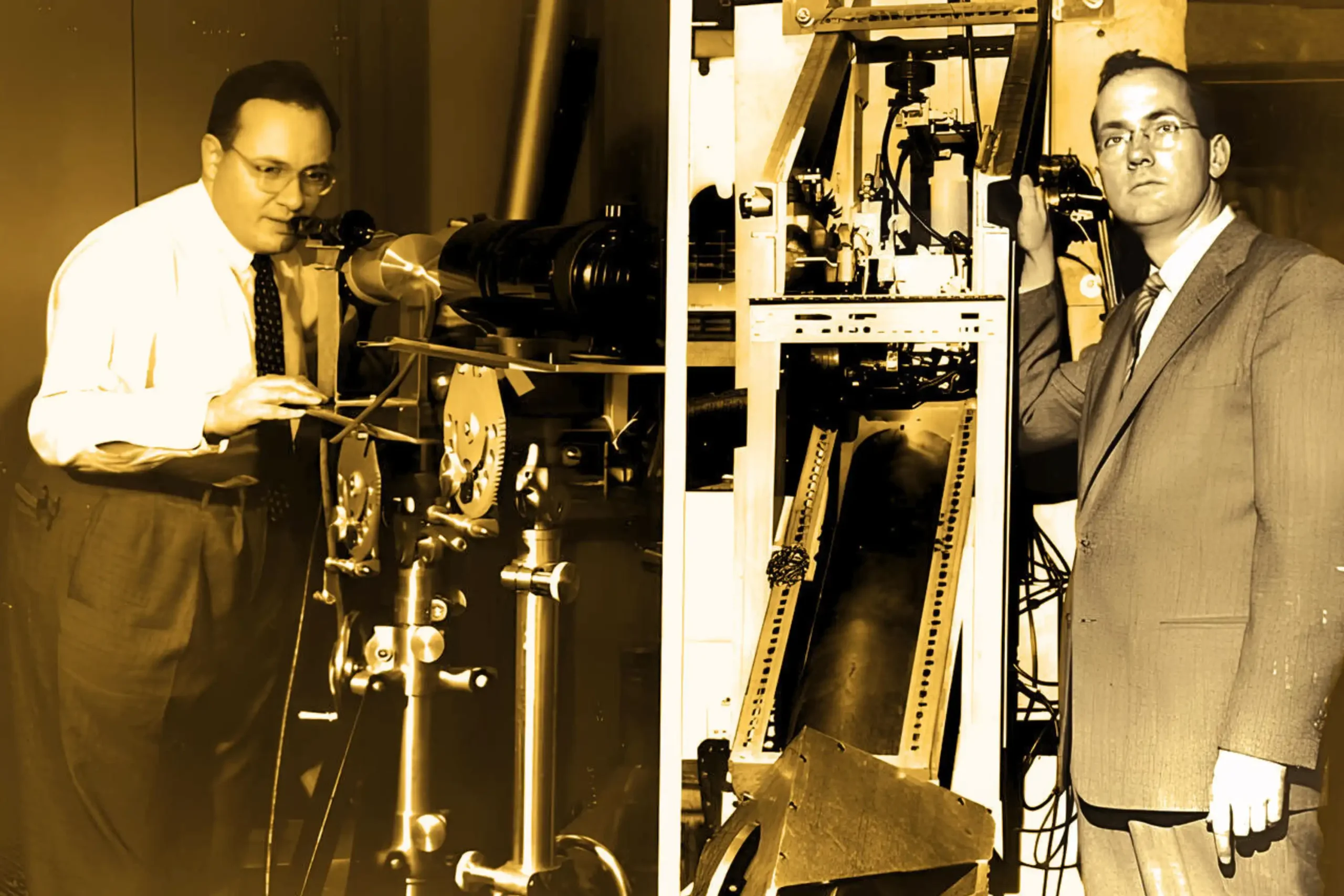

The laser was conceptualized in 1958 by Charles Townes and Arthur Schawlow, building on Townes’ previous work on the maser. They published a seminal paper outlining the principle of the optical maser, which used stimulated emission to amplify and generate an intense beam of coherent light.

Townes and Schawlow described how a Fabry–Pérot cavity with mirrored ends could be used to amplify stimulated emission from excited matter. They proposed that the amplifying medium could be a gas, a liquid, or a solid-state material.

Although no working laser was built then, this theoretical formulation paved the way for Theodore Maiman’s realization of the first laser just two years later.

The laser has become indispensable for fiber optic communications and diverse applications like materials processing, medicine, displays, sensing, and more.

Townes shared the 1964 Nobel Prize in Physics with Nikolay Basov and Alexander Prokhorov for fundamental work on quantum electronics that led to the maser and laser; Schawlow shared the 1981 Nobel Prize in Physics for his contributions to laser spectroscopy. The concepts they defined, including stimulated emission, cavity feedback, and coherent light output, remain central to laser operation and enabled a revolution in optics.

Highlights of the first laser theory:

- Proposed by Charles Townes and Arthur Schawlow in 1958

- Built on Townes’ earlier maser research (microwave amplification)

- Outlined using stimulated emission to amplify light in an optical cavity

- Established principles for generating an intense coherent beam

- Envisioned possible solid, liquid, or gas-based systems

- Led to the first actual laser demonstration by Maiman in 1960

- Townes, Basov, and Prokhorov received the 1964 Nobel Prize in Physics; Schawlow shared the 1981 prize

1960 – First Laser

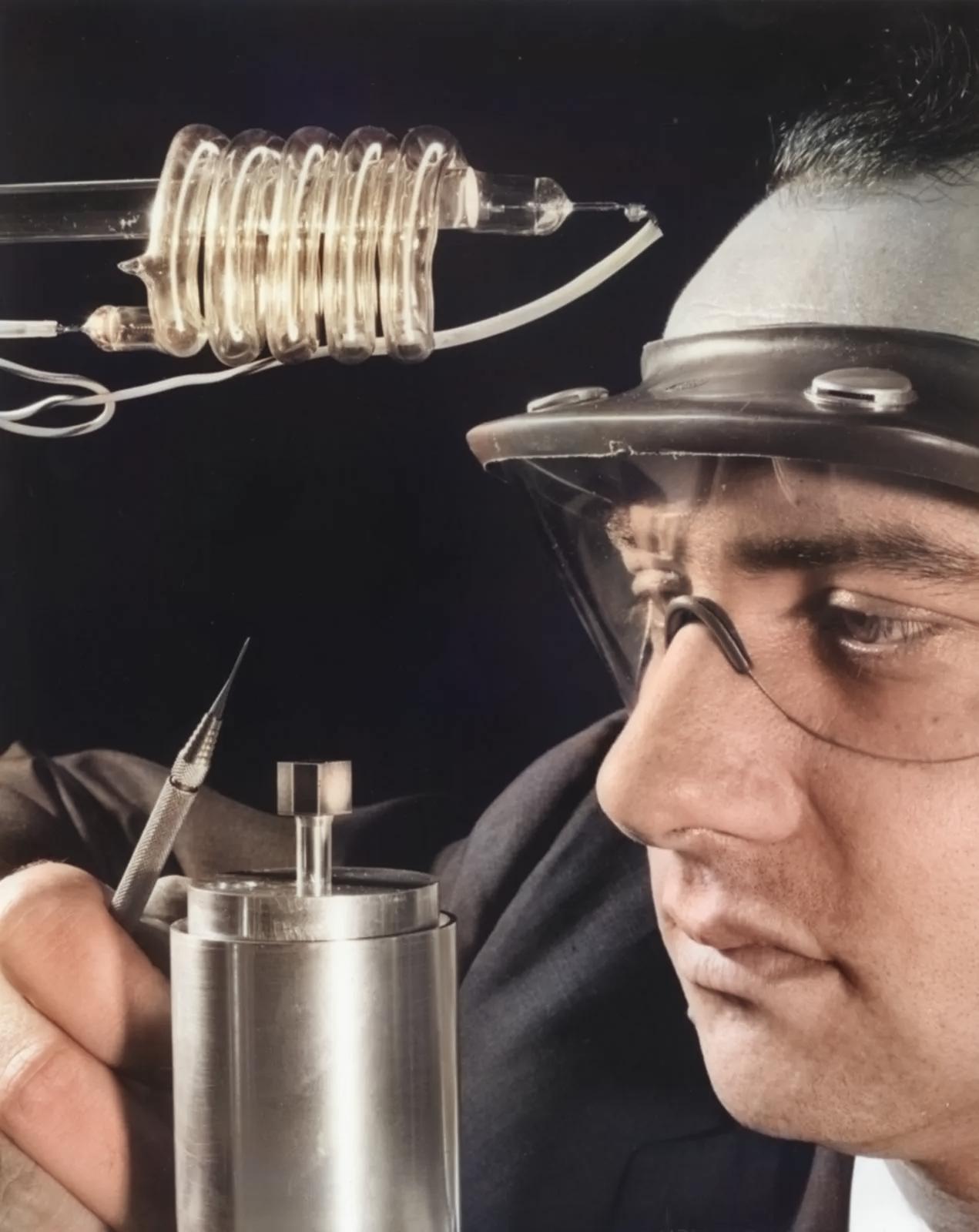

The world’s first functioning laser was demonstrated by Theodore Maiman in 1960 at Hughes Research Laboratories. It was a pulsed ruby laser based on a pink ruby rod with silver-coated mirrors at the ends. Maiman’s laser produced a pulse of coherent red light at 694.3 nm wavelength, with a beam diameter of ~1 cm and a pulse duration of about a millisecond.

To pump the laser, Maiman used a spiral xenon flash lamp wrapped around the ruby rod. The intense flash excited Cr³⁺ ions in the ruby into higher energy levels.

As they decayed back down, stimulated emission generated an amplified, highly focused beam of light. Maiman’s successful demonstration of the ruby laser just two years after it was theorized proved the principles of laser operation defined by Townes and Schawlow.

This first laser fired pulses of a few joules, and researchers soon developed far more powerful pulsed lasers. Its coherent, monochromatic, and directional beam opened up new applications like laser surgery, cutting, alignment, ranging, and more.

Maiman’s pioneering accomplishment sparked intense research that produced better lasers like the helium-neon laser by 1961. It paved the way for diverse laser applications that have become ubiquitous today.

Key facts about the first laser:

- Demonstrated in 1960 by Theodore Maiman at Hughes Research Labs

- It was a pulsed ruby laser with silver-coated mirrored ends

- Produced pulses of coherent red light at 694.3 nm

- Pumped by a xenon flash lamp surrounding the ruby rod

- Proved principles of laser operation using stimulated emission

- Fired bursts of light energy in the joule range initially

- Led to rapid improvements in laser power and applications

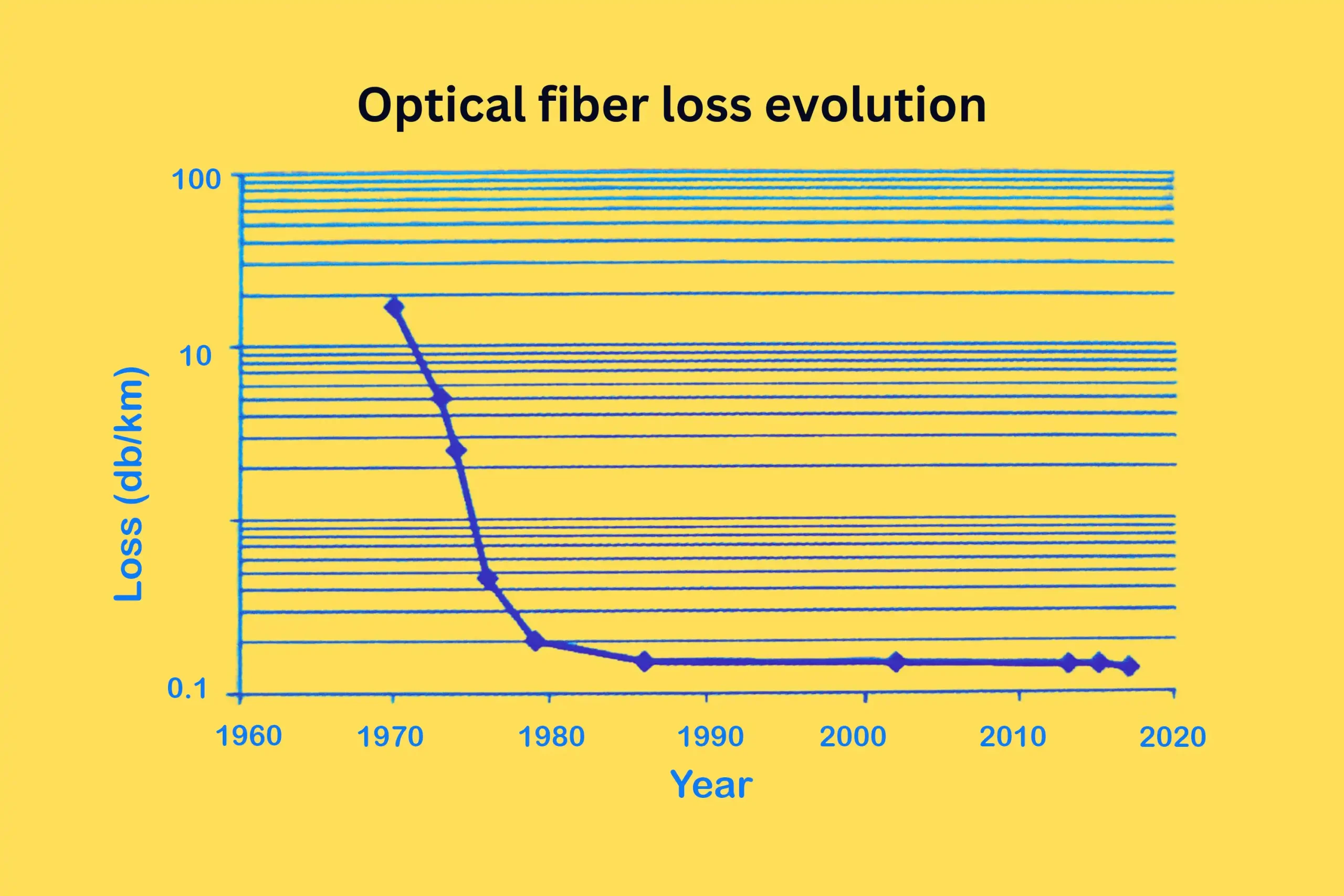

1970 – Single-Mode Fiber

The first single-mode optical fiber was developed by researchers Robert Maurer, Donald Keck, and Peter Schultz at Corning Glass Works in 1970. By lowering the fiber core diameter and optimizing the refractive index difference between the core and cladding, they achieved single-mode transmission for the first time.

Earlier multimode fibers exhibited modal dispersion, causing signal distortion over distance. In a single-mode fiber, only the fundamental mode can propagate, eliminating modal dispersion. Corning’s fiber had losses of just 16 dB/km at 633 nm wavelength, far lower than conventional fibers of the time.

This breakthrough was achieved by doping the fused silica core with titanium to raise its refractive index slightly above that of the cladding, so that a small core could guide light by total internal reflection. The researchers drew fibers with different dopant concentrations to optimize characteristics. The resulting single-mode fiber had a core diameter of ~10 microns.
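Whether a step-index fiber is single-mode is commonly checked with the normalized frequency V = (2πa/λ)·sqrt(n_core² − n_clad²); only the fundamental mode propagates when V is below about 2.405. The sketch below evaluates that criterion. The core radius and refractive indices are hypothetical illustrative values, not the parameters of the 1970 Corning fiber.

```python
import math

SINGLE_MODE_CUTOFF = 2.405  # first zero of the Bessel function J0

def v_number(core_radius_um: float, wavelength_um: float,
             n_core: float, n_clad: float) -> float:
    """Normalized frequency of a step-index fiber."""
    numerical_aperture = math.sqrt(n_core ** 2 - n_clad ** 2)
    return 2 * math.pi * core_radius_um / wavelength_um * numerical_aperture

def is_single_mode(core_radius_um, wavelength_um, n_core, n_clad) -> bool:
    return v_number(core_radius_um, wavelength_um, n_core, n_clad) < SINGLE_MODE_CUTOFF

# Hypothetical fiber: 4.1 um core radius, slightly raised core index
N_CORE, N_CLAD = 1.4504, 1.4447
print(is_single_mode(4.1, 1.55, N_CORE, N_CLAD))   # small core, long wavelength
print(is_single_mode(25.0, 1.55, N_CORE, N_CLAD))  # large multimode-style core
```

The formula shows the two levers the text describes: shrinking the core radius and keeping the core-cladding index difference small both lower V.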

The single-mode fiber revolutionized telecommunications by enabling kilometers of low-distortion signal transmission. Maurer, Keck, and Schultz were awarded the National Medal of Technology in 2000 for their pioneering accomplishment. Their innovations propelled the digital information age.

Significance of the first single-mode fiber:

- Developed at Corning Glass Works by Maurer, Keck, and Schultz in 1970

- Reduced core diameter and optimized the core-cladding refractive index difference

- Permitted only one propagation mode to eliminate modal dispersion

- Titanium doping of fused silica core raised its refractive index above the cladding

- Achieved loss of just 16 dB/km, one-tenth of conventional fiber

- Core diameter of around 10 microns enabled single-mode behavior

- Enabled low-distortion transmission over long distances

1970 – Continuous Wave Room Temperature Laser

Continuous wave laser operation at room temperature became possible in 1970 through the work of Bell Labs and Ioffe Institute researchers. This eliminated the need for cryogenic cooling and enabled compact semiconductor diode lasers.

In early May, Zhores Alferov’s research group at the Ioffe Institute in the Soviet Union demonstrated room-temperature continuous-wave lasing in structures with heterojunctions of GaAs and related alloys.

On June 1, Mort Panish and Izuo Hayashi at Bell Labs independently achieved continuous operation at room temperature, using a GaAs double-heterostructure laser under continuous current injection.

Previously, semiconductor lasers had to be cooled to cryogenic temperatures for continuous operation. The double heterostructure, which sandwiches an undoped GaAs active layer between p-type and n-type AlGaAs cladding, confined carriers well enough to sustain population inversion and optical gain at room temperature. This paved the way for practical, electrically pumped diode lasers.

The pioneering work on semiconductor heterostructures earned Alferov and Herbert Kroemer a share of the 2000 Nobel Prize in Physics; the other half went to Jack Kilby for the integrated circuit. Practical semiconductor diode lasers became ubiquitous in compact devices like laser pointers, CD/DVD players, and fiber optic links.

Significance of room temperature CW semiconductor lasers:

- First demonstrated in 1970 at Ioffe Institute and Bell Labs.

- Based on GaAs/AlGaAs double heterostructure p-n junctions

- Confined carriers in undoped GaAs layer between p and n cladding

- Allowed continuous lasing at room temperature without cooling

- Eliminated the need for bulky laser setups; enabled miniaturized diode lasers

- Led to many applications like laser pointers, CD/DVD systems, optical fiber links

- Alferov and Kroemer shared the 2000 Nobel Prize in Physics for heterostructure work

1977 – Live Traffic through Fiber

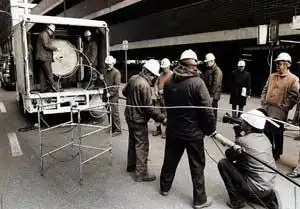

One of the first systems to carry live telephone traffic over fiber went into service in 1977, when AT&T installed an experimental fiber optic transmission system in Chicago. This marked fiber optics’ transition from the lab to real-world telecommunication applications.

AT&T’s early system operated at 44.7 Mbps and spanned 1.6 km initially. The multimode fiber had a loss rate of ~20 dB/km and used GaAs semiconductor lasers transmitting at 820 nm wavelength. Repeaters electronically regenerated the signals at intervals.

Within months, capacity was boosted to 90 Mbps. The Chicago fiber network expanded rapidly as operators gained confidence in the reliability of fiber links.

By 1980, AT&T operated nearly 300 km of fiber, carrying over 2 million phone calls daily in Chicago. The success of these early field trials cemented fiber’s central role in long-distance communications.

Other pioneering demonstrations in 1977 included:

- 45 Mbps over a 10 km multimode fiber link by General Telephone and Electronics Corp.

- 6 Mbps over 0.8 km by British Post Office

Key achievements of early fiber systems:

- AT&T installed one of the first live voice traffic fiber links in Chicago in 1977

- Operated at 44.7 Mbps over 1.6 km initially

- Used GaAs lasers and multimode fiber with 20 dB/km loss

- Expanded to 90 Mbps and 300 km length, carrying 2 million calls by 1980

- Other early field demos: 45 Mbps/10 km by GTE, 6 Mbps/0.8 km by British Post Office

- Proved feasibility of fiber optic telecom, paving the way for commercial deployment

1987 – Erbium-Doped Fiber Amplifier

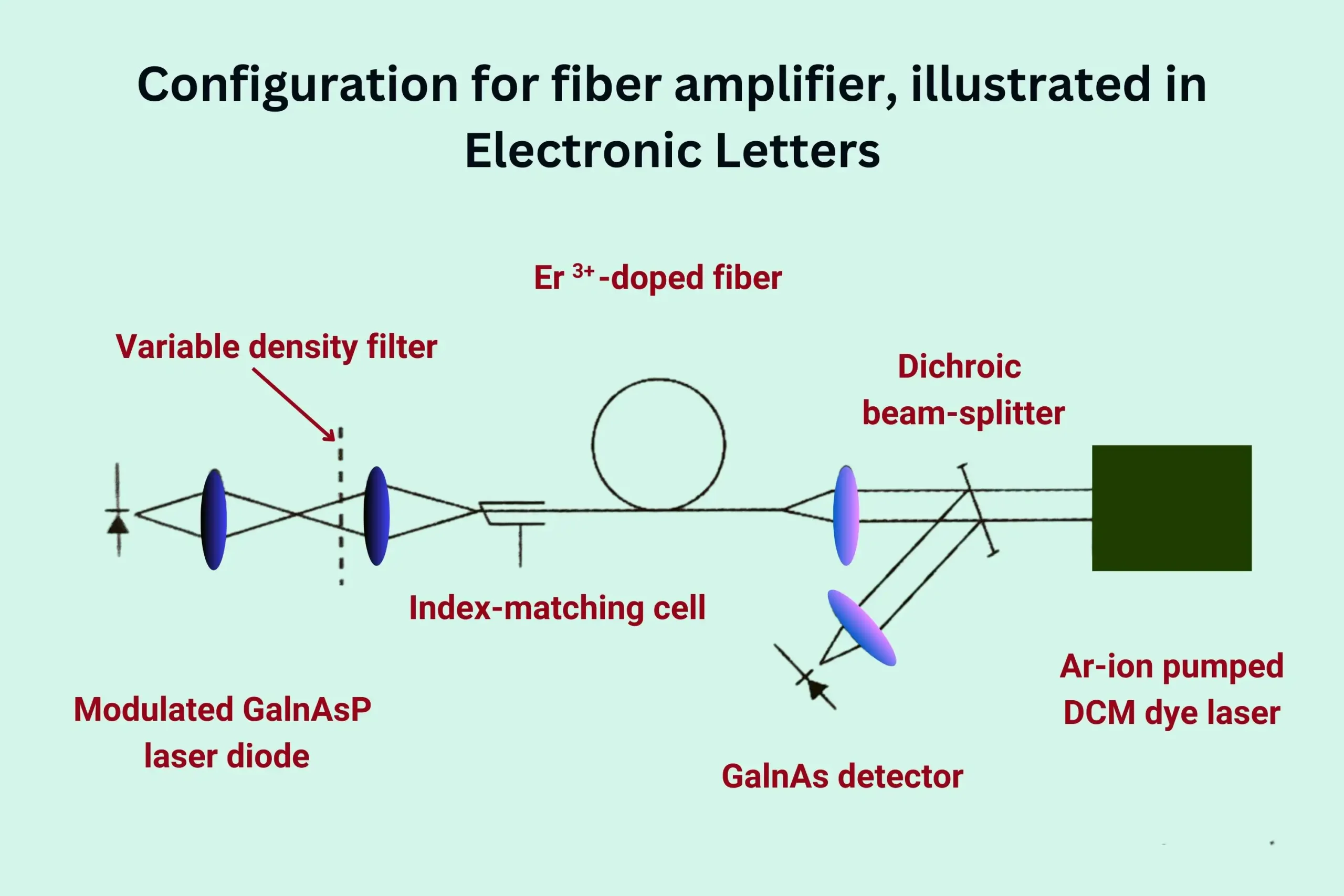

Erbium-doped fiber amplifiers (EDFAs) were first demonstrated in 1987, overcoming the distance limitations of optical fiber networks. EDFAs amplify signals within the fiber itself, eliminating the need for optoelectronic repeaters.

In EDFAs, silica fibers are doped with erbium ions, which can be pumped to a metastable energy level by a 980 or 1480 nm laser. This enables signal amplification at 1550 nm by stimulated emission as erbium ions decay to the ground state.

The first experimental EDFA was built by David Payne at Southampton University. Bell Labs researchers later optimized EDFA performance for wideband amplification.

EDFAs revolutionized fiber networks by amplifying signals directly within the transmission fiber at regular 80-100 km intervals. This overcomes attenuation losses without intermediate conversion to electronics.
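The amplifier spacing described above can be turned into a rough link budget: each amplifier must supply about as much gain as the preceding span loses. The sketch below assumes a fiber loss of 0.2 dB/km near 1550 nm, a typical figure that is an assumption here rather than a value from this article, and the function names are illustrative.

```python
import math

ASSUMED_LOSS_DB_PER_KM = 0.2  # assumed typical fiber loss near 1550 nm

def span_loss_db(span_km: float, loss_db_per_km: float = ASSUMED_LOSS_DB_PER_KM) -> float:
    """Loss of one span, which is the gain the next amplifier must restore."""
    return span_km * loss_db_per_km

def inline_amplifiers(link_km: float, span_km: float) -> int:
    """Amplifiers placed between spans, excluding the two terminal sites."""
    return max(math.ceil(link_km / span_km) - 1, 0)

for span in (80, 100):
    print(f"{span} km span: {span_loss_db(span):.0f} dB gain needed, "
          f"{inline_amplifiers(4000, span)} inline amplifiers on a 4000 km link")
```

Under these assumptions, spans of 80 to 100 km call for roughly 16 to 20 dB of gain per amplifier.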

EDFAs enabled long-haul fiber links, underwater cables, and dense wavelength division multiplexing. Today’s optical amplifier market exceeds $8 billion, with erbium fibers at the core.

Key facts on erbium-doped fiber amplifiers:

- First demonstrated in 1987 by David Payne at Southampton University.

- Silica fiber doped with erbium ions that can be optically pumped

- Provides gain in the 1530 to 1560 nm band by stimulated emission

- Amplifies signal directly within fiber by interaction with the pump laser.

- Overcame the need for optoelectronic repeaters for long-distance networks

- Enabled undersea cables, extended fiber links, and dense WDM

- EDFA market size today exceeds $8 billion

1996 – Wavelength Division Multiplexing

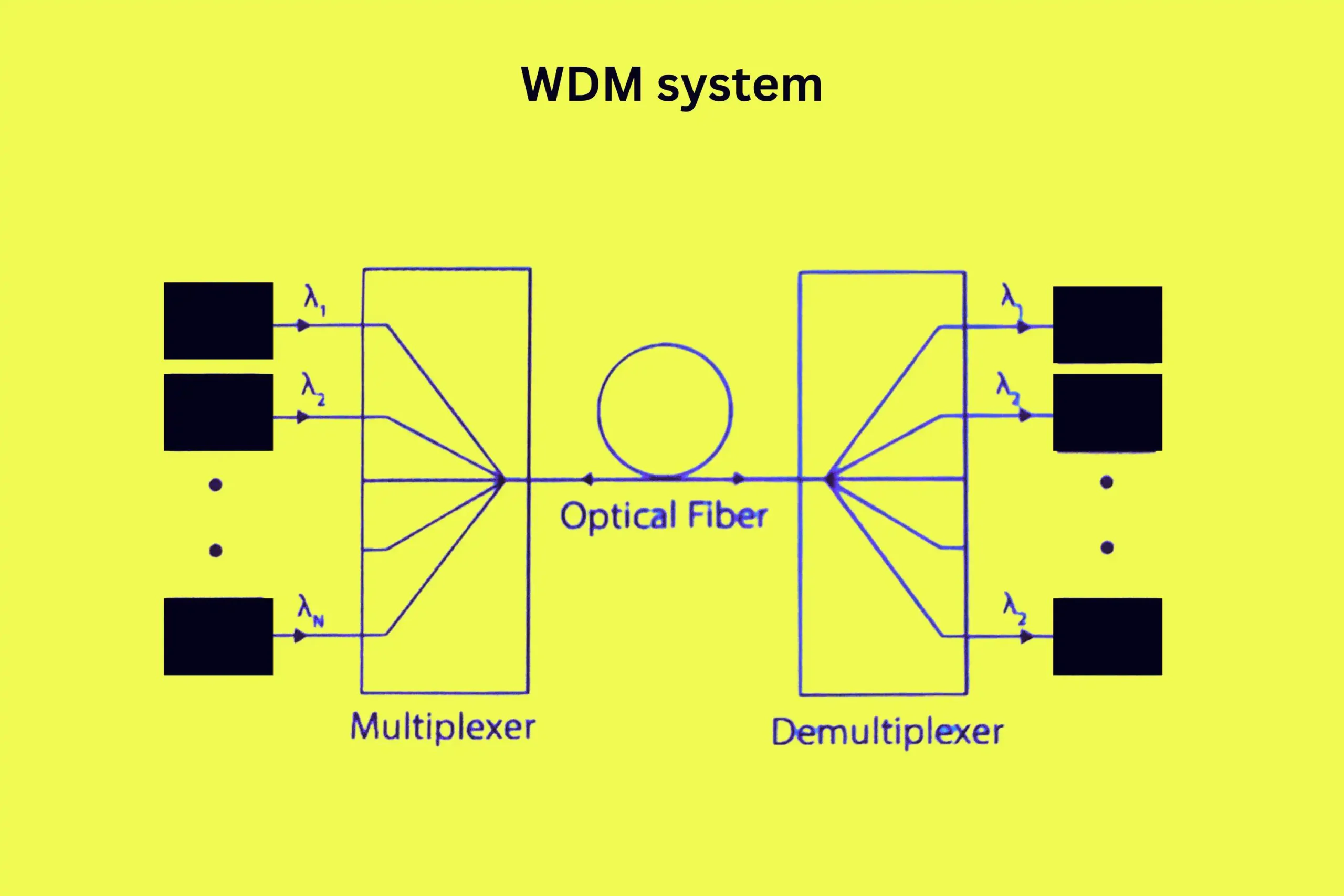

Wavelength division multiplexing (WDM) technology was first commercially deployed in 1996 on point-to-point long-haul fiber links, increasing capacity dramatically. WDM allows the transmission of multiple signals through a single optical fiber simultaneously by using different wavelengths.

In early WDM systems, up to 16 channels could be multiplexed, each modulated with a 2.5 Gbps data rate. The channels were spaced 100 GHz apart (about 0.8 nm) in the 1530 to 1560 nm low-loss fiber band. Demultiplexers with filters separated the wavelengths at the receiver. Total capacity reached 40 Gbps on one fiber.
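The figures in this paragraph can be checked with two short calculations: a frequency spacing Δf corresponds to a wavelength spacing of roughly λ²·Δf/c, and aggregate capacity is the channel count times the per-channel rate. The sketch below uses 1545 nm as an assumed mid-band wavelength; the function names are illustrative.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458

def spacing_nm(center_wavelength_nm: float, spacing_ghz: float) -> float:
    """Approximate wavelength spacing for a given frequency spacing."""
    wavelength_m = center_wavelength_nm * 1e-9
    spacing_m = wavelength_m ** 2 * (spacing_ghz * 1e9) / SPEED_OF_LIGHT_M_PER_S
    return spacing_m * 1e9

def total_capacity_gbps(channels: int, rate_gbps: float) -> float:
    """Aggregate capacity of equally loaded WDM channels."""
    return channels * rate_gbps

print(f"100 GHz near 1545 nm is about {spacing_nm(1545, 100):.2f} nm")
print(f"16 channels x 2.5 Gbps = {total_capacity_gbps(16, 2.5):.0f} Gbps")
```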

By 1999, commercial WDM systems achieved over 100 channels spaced 50 GHz apart, with total capacities of up to 3.2 Tbps per fiber. Tunable lasers enabled closer wavelength packing.

The parallel transmission enabled by WDM led to exponential traffic growth in backbones. Further innovations like dense WDM and amplified WDM extended capacities even higher.

Key achievements of early wavelength division multiplexing:

- Commercially deployed in 1996 on point-to-point fiber links

- Allowed transmission of multiple signals over one fiber

- Up to 16 channels initially, each at 2.5 Gbps data rate

- 100 GHz spacing between wavelengths from 1530 to 1560 nm

- Total capacity of 40 Gbps on one fiber

- By 1999, it exceeded 100 channels and 3.2 Tbps capacity

- Enabled exponential traffic growth on fiber backbones

2002 – Differential Phase Shift Keying

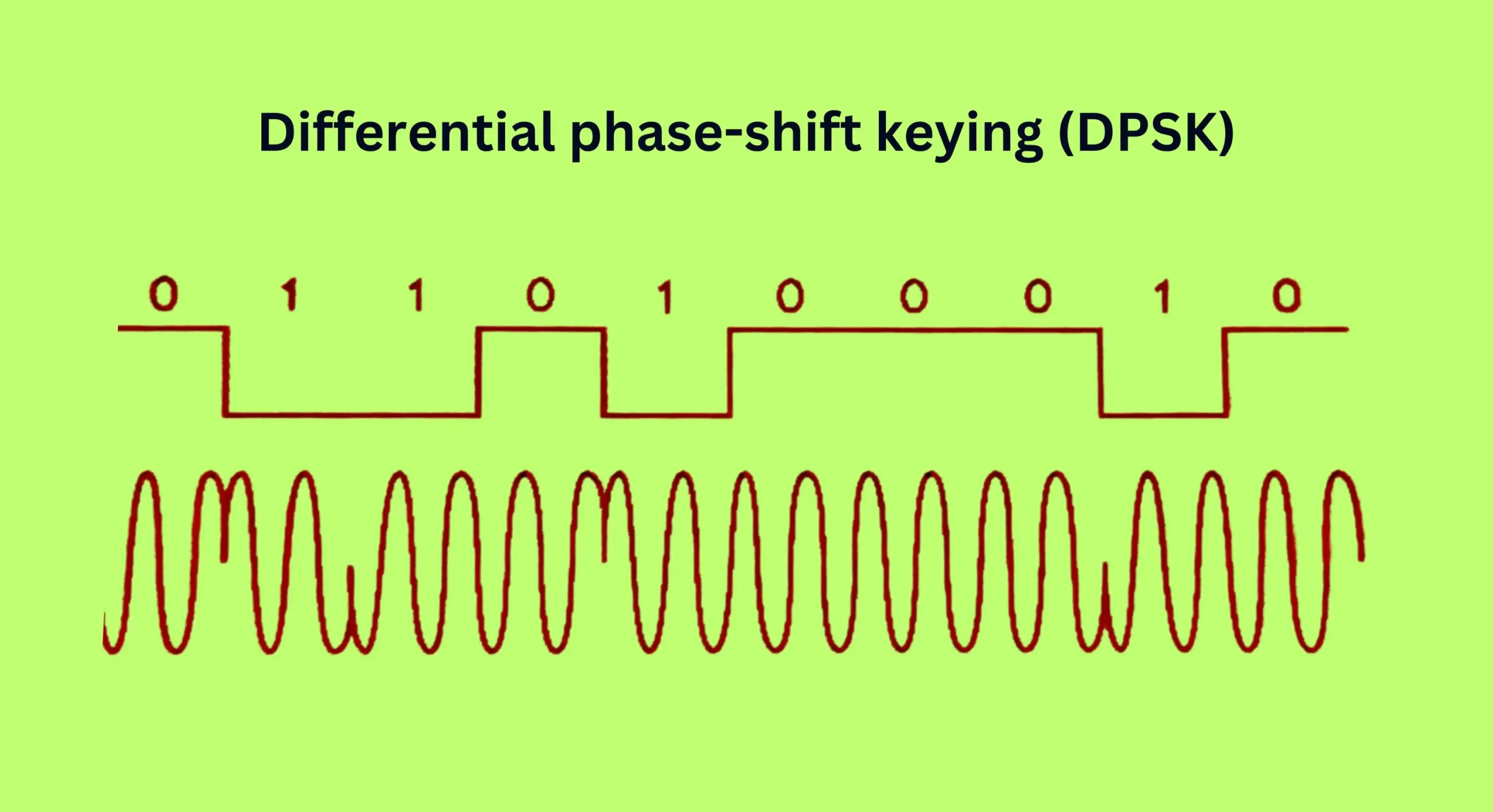

Differential phase-shift keying (DPSK) for long-haul 40 Gbps fiber optic transmission was first demonstrated in 2002 by researchers at Bell Labs, improving sensitivity by 3 dB compared to conventional on-off keying.

In DPSK, data is encoded in the phase change between adjacent bits rather than in amplitude. A 1-bit is sent as a phase shift of π relative to the previous bit, while a 0-bit leaves the phase unchanged.

At the receiver, a delay line interferometer compares the phase of each bit to the previous bit. Constructive or destructive interference outputs the data.
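The encode and decode steps can be sketched in a few lines. This is a simplified, noise-free model under stated assumptions: phases are plain numbers in radians, a 1-bit adds π to the previous phase, and the "interferometer" is modeled as the sign of the cosine of the phase difference between neighboring symbols. The function names are illustrative.

```python
import math

def dpsk_encode(bits, initial_phase=0.0):
    """Return the transmitted phases, starting with a reference symbol."""
    phases = [initial_phase]
    for bit in bits:
        shift = math.pi if bit else 0.0  # 1 flips the phase, 0 keeps it
        phases.append((phases[-1] + shift) % (2 * math.pi))
    return phases

def dpsk_decode(phases):
    """Compare each symbol with the previous one, as a delay interferometer does."""
    bits = []
    for previous, current in zip(phases, phases[1:]):
        interference = math.cos(current - previous)  # +1 constructive, -1 destructive
        bits.append(1 if interference < 0 else 0)
    return bits

message = [1, 0, 1, 1, 0, 0, 1]
received = dpsk_decode(dpsk_encode(message, initial_phase=0.7))
print(received == message)
```

Because only phase differences are decoded, the receiver does not need to know the absolute phase of the carrier, which the arbitrary `initial_phase` in the usage line illustrates.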

DPSK is more tolerant to amplifier noise and fiber nonlinearities than on-off keying since only phase change is decoded. The initial experiment transmitted error-free over 4000 km at 40 Gbps.

DPSK increased spectral efficiency, enabling closer channel spacing and higher capacities. Variants like DQPSK allowed 100 Gbps systems.

Key aspects of differential phase shift keying in fiber optic networks:

- First demonstrated for long-haul 40 Gbps transmission in 2002 by Bell Labs

- Data is encoded as the phase shift between bits rather than amplitude.

- 1-bit causes phase change, 0-bit causes no change

- Decoded by interferometer comparing each bit’s phase to the previous

- Higher noise tolerance than on-off keying as only phase decoded

- Enabled 40 Gbps over 4000 km initially

- Improved spectral efficiency for higher fiber capacities

- Led to DQPSK for 100 Gbps networks

2003 – G-PON

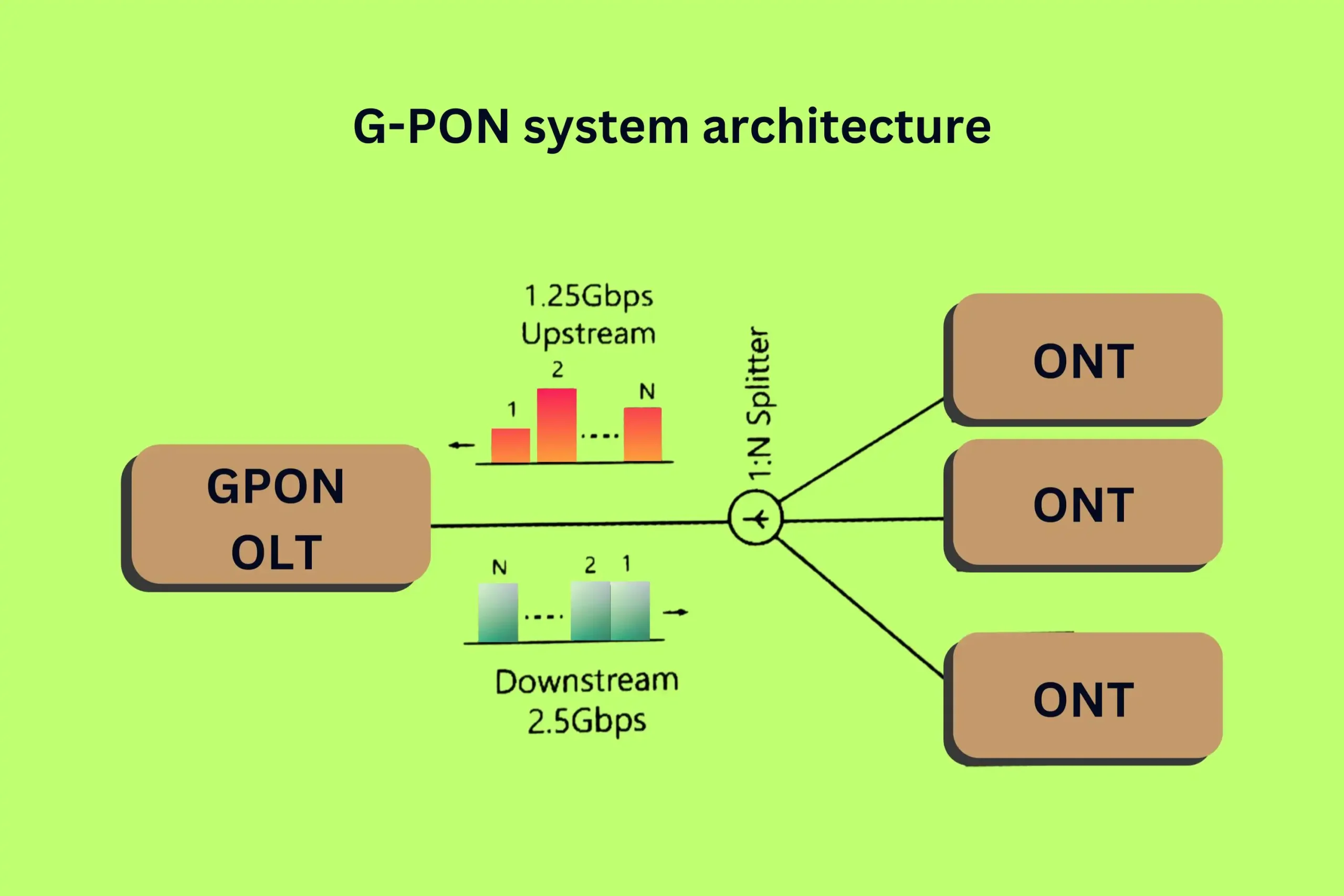

The gigabit-capable passive optical network (G-PON) standard was ratified by the International Telecommunication Union in 2003, enabling cost-effective fiber-to-the-premises deployment.

G-PON uses a passive optical distribution network without active components. A single feeder fiber from the provider hub splits to 32-128 customer premises, enabled by passive splitters. Data rates up to 2.5 Gbps downstream and 1.2 Gbps upstream are supported.
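Two consequences of passive splitting are easy to quantify: an ideal 1:N splitter divides optical power N ways, costing 10·log10(N) dB, and the shared downstream rate averages out to the line rate divided by N when every user is active. The sketch below ignores excess splitter loss and protocol overhead, and its function names are illustrative.

```python
import math

def ideal_split_loss_db(split_ratio: int) -> float:
    """Power-division loss of an ideal 1:N splitter (no excess loss)."""
    return 10 * math.log10(split_ratio)

def average_share_mbps(line_rate_gbps: float, split_ratio: int) -> float:
    """Average rate per user if all users on the splitter are active at once."""
    return line_rate_gbps * 1000 / split_ratio

for users in (32, 64, 128):
    print(f"1:{users} split: {ideal_split_loss_db(users):.1f} dB, "
          f"{average_share_mbps(2.5, users):.1f} Mbps average downstream share")
```

Each doubling of the split ratio costs about 3 dB of optical budget and halves the average share, which is the trade-off operators weigh when choosing between 32 and 128 premises per feeder.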

G-PON delivered the economies of scale needed for widespread residential fiber roll-out. Asia led early adoption, with over 40 million subscribers in China alone by 2014.

The upgraded XG-PON standard later boosted speeds to 10 Gbps down and 2.5 Gbps up. PON networks now surpass half a billion users globally, promising a fiber-connected world.

Key highlights of G-PON standard:

- Ratified by ITU in 2003

- Enables fiber-to-the-premises access networks

- Passive splitters divide single provider fiber to 32-128 users

- Supports 2.5 Gbps downstream, 1.2 Gbps upstream

- Drives fiber broadband growth by lowering rollout costs

- Over 40 million users in China by 2014

- Evolved into XG-PON with 10 Gbps down, 2.5 Gbps up

- Total PON subscriptions now exceed 500 million worldwide

2010 – XG-PON

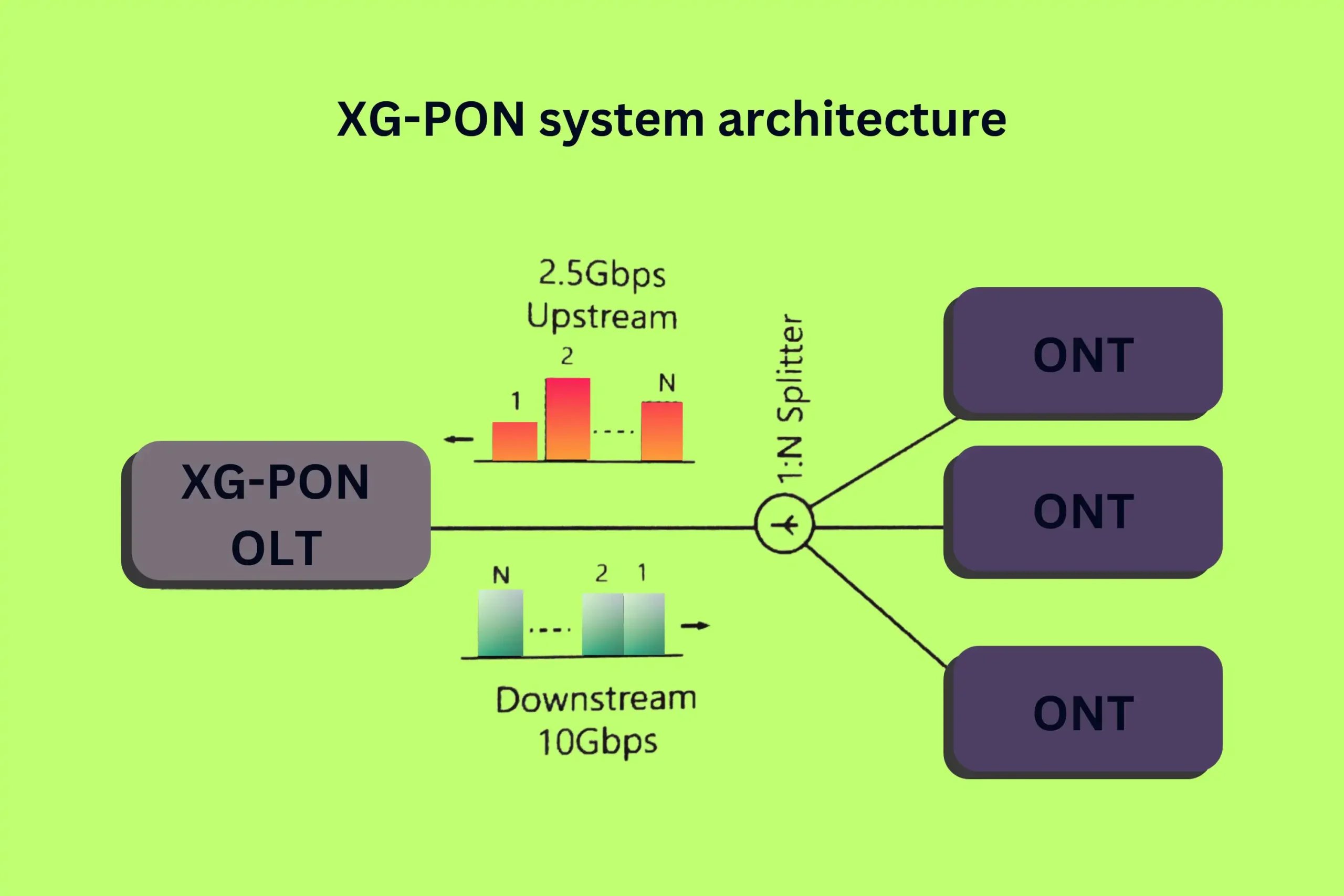

The 10 gigabit-capable passive optical network (XG-PON) standard was approved by the International Telecommunication Union in 2010, providing an upgrade from G-PON with enhanced speeds.

XG-PON increased downstream capacity to 10 Gbps and upstream to 2.5 Gbps compared to 2.5/1.2 Gbps in G-PON. It also added enhanced security and efficiency features. The standard was backward compatible, allowing co-existence with G-PON networks on the same fiber infrastructure.

Deployment accelerated after 2015, led by Asia. By 2021, over 40 million XG-PON lines were in service globally. XGS-PON later increased symmetric speeds to 10 Gbps both downstream and upstream. Global XG(S)-PON subscriptions are projected to surpass 150 million by 2025, driven by 5G backhaul and gigabit FTTH demand.

Key aspects of the XG-PON standard:

- Approved by ITU in 2010 as an upgrade from G-PON

- Boosts downstream speed to 10 Gbps (from 2.5 Gbps)

- Upstream speed increased to 2.5 Gbps (from 1.2 Gbps)

- Adds enhanced security and efficiency

- Backward compatible with G-PON on the same fiber

- Over 40 million lines in service globally by 2021

- Path to XGS-PON with symmetric 10 Gbps up/down

- Projected 150 million users by 2025, driven by 5G and gigabit fiber demand

2012 – Flexible Grid WDM

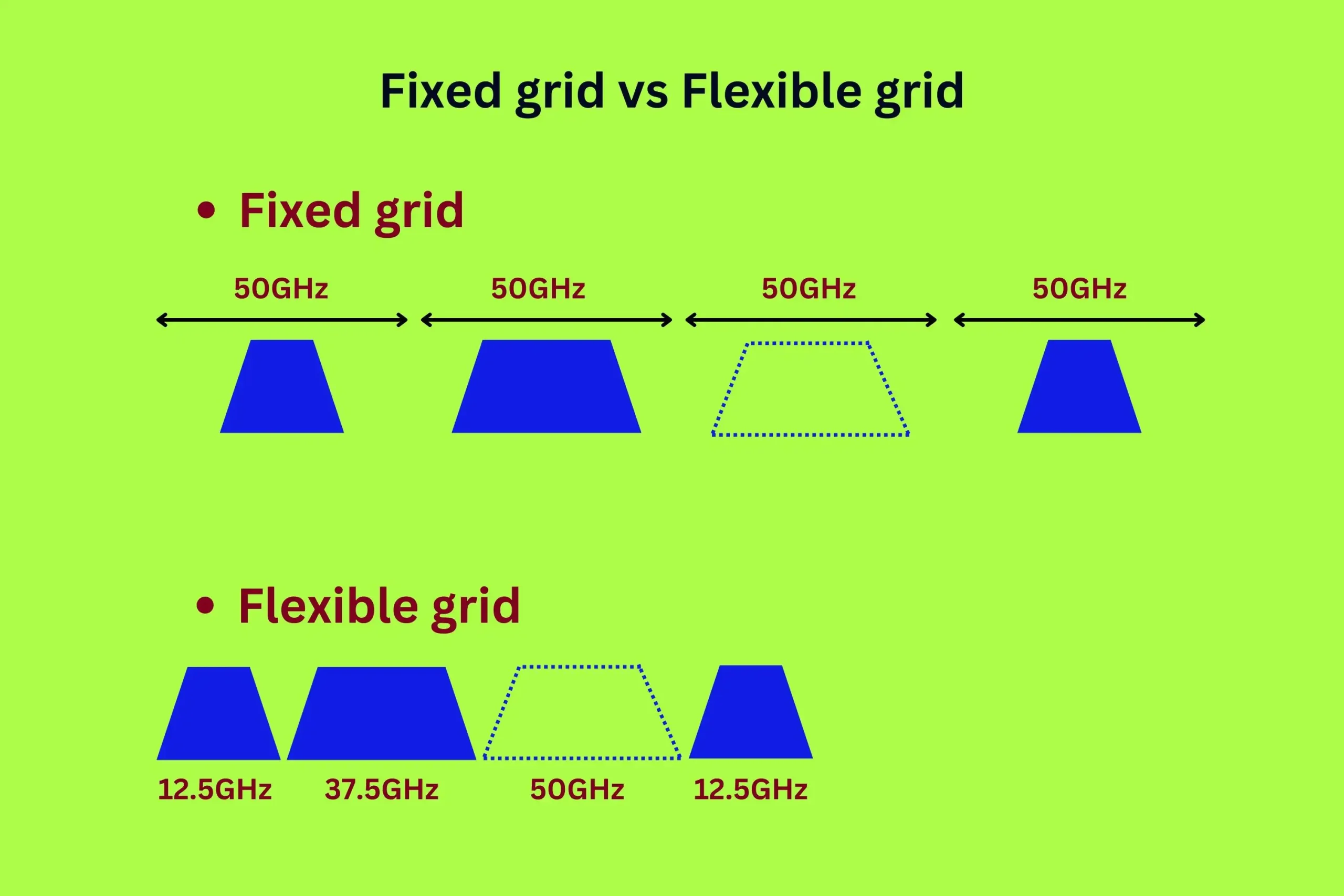

The flexible grid wavelength division multiplexing (WDM) standard was approved by the International Telecommunication Union (ITU) in 2012, increasing fiber capacity dramatically compared to traditional fixed grids.

Earlier WDM systems used a fixed 50 GHz or 100 GHz channel spacing plan across the 1530-1565 nm band. With the flexible grid, channel center frequencies sit on a finer 6.25 GHz raster and slot widths are assigned in 12.5 GHz increments. Channel width can be adapted from 12.5 to 87.5 GHz based on need, which lets operators fit more channels into the same spectrum.
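A flexible-grid slot can be described by two integers. The sketch below follows the convention of ITU-T G.694.1 as I understand it: center frequency 193.1 THz + n × 6.25 GHz and slot width m × 12.5 GHz. The function name and the sample channels are illustrative assumptions.

```python
ANCHOR_THZ = 193.1            # reference frequency of the grid
CENTER_STEP_THZ = 0.00625     # 6.25 GHz center-frequency granularity
WIDTH_STEP_GHZ = 12.5         # slot-width granularity

def frequency_slot(n: int, m: int):
    """Return (center frequency in THz, slot width in GHz) for integers n and m."""
    if m < 1:
        raise ValueError("slot width multiplier m must be at least 1")
    return ANCHOR_THZ + n * CENTER_STEP_THZ, m * WIDTH_STEP_GHZ

# Hypothetical mix: a narrow legacy channel, a 50 GHz channel, a wide superchannel
for label, n, m in (("narrow", -8, 1), ("50 GHz", 0, 4), ("wide", 10, 7)):
    center, width = frequency_slot(n, m)
    print(f"{label}: center {center:.5f} THz, width {width} GHz")
```

With m = 4 the slot matches the old 50 GHz fixed grid, so existing channels fit alongside wider ones.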

A flexible grid enables efficient use of fiber capacity in an era of heterogeneous mixtures of lower speed 10G signals and high throughput 40G/100G/400G channels.

Additional flexibilities like variable modulation formats further optimize spectral utilization. From an initial 2 Tbps capacity, a flexible grid now enables >25 Tbps per fiber.

Benefits of the flexible grid WDM standard:

- Approved by ITU in 2012 to enhance fiber capacity

- Replaces fixed 50/100 GHz grid with flexible 12.5 to 87.5 GHz slots on a 6.25 GHz raster

- Channel width can be dynamically allocated as needed

- Optimizes available spectrum for mixed low and high-speed traffic

- Efficiently accommodates legacy 10G with 40G/100G/400G

- Advanced modulation formats increase capacity further

- Increased typical fiber capacity from 2 Tbps to >25 Tbps

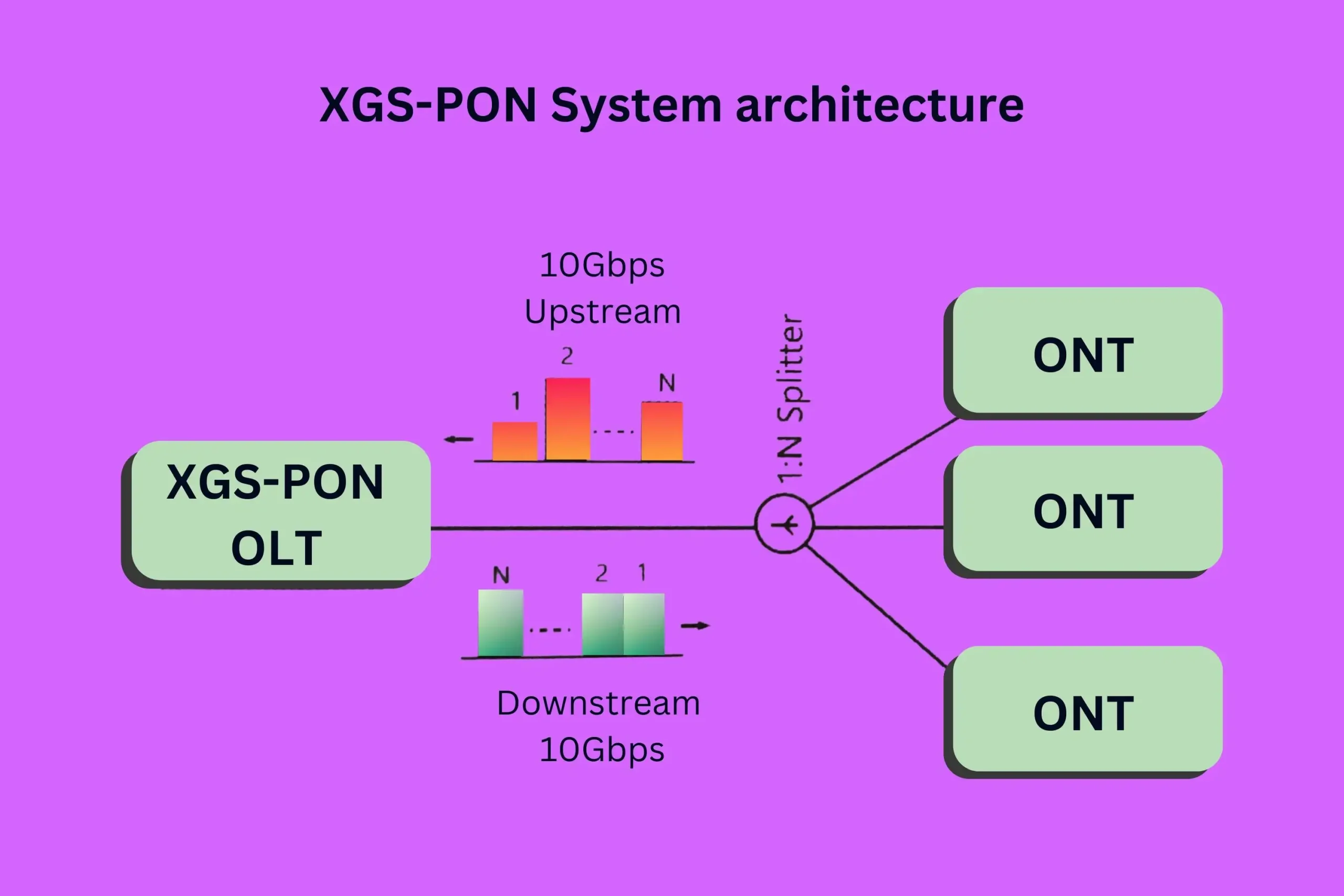

2016 – XGS-PON

The 10 gigabit symmetrical passive optical network (XGS-PON) standard was defined in ITU-T recommendation G.9807.1, approved in 2016. It boosted both downstream and upstream line rates to 10 Gbps in optical access networks.

XGS-PON provided a straightforward upgrade path from previous generations of PON technology, coexisting over the same fiber plant. It enabled carriers to economically deliver 10 Gbps broadband services to meet rapidly growing demands for symmetric gigabit access.

The first deployments commenced in 2020, led by South Korea and Japan. XGS-PON is projected to reach nearly 60 million lines globally by 2026.

Next-generation standards like 50G-PON will push capacities even higher. Fiber access networks continue to evolve, underscoring their long-term scalability to meet future needs.

Key aspects of the 10 Gbps symmetric XGS-PON standard:

- Approved by ITU in 2016 recommendation G.9807.1

- Symmetrical 10 Gbps upstream and downstream

- Smooth upgrade from previous PON standards

- Shares fiber infrastructure with G-PON and XG-PON

- Driven by demand for gigabit symmetrical broadband

- First deployments in 2020 in South Korea, Japan

- Nearly 60 million lines are projected globally by 2026

- Path to 50G-PON and beyond

2016 – Low-Loss Low-Nonlinearity Fiber

Ultra-low loss pure-silica core fiber was developed in 2016, overcoming capacity limits imposed by fiber attenuation and nonlinearity.

Researchers at Corning achieved 0.159 dB/km loss over 300 km in a pure-silica core fiber structure. This is close to the theoretical minimum loss for silica fiber. The large effective area (Aeff) of 130 μm² also reduced nonlinear impairments.

Low-loss, low-nonlinearity fibers enabled longer unrepeated links, higher per-channel data rates, and denser WDM channel spacing without signal degradation. 5G optical backbone networks are leveraging these fibers for ultra-long haul high-capacity transmission.

Ongoing innovations in fiber materials, designs, and manufacturing aim to push losses toward the roughly 0.14 dB/km floor set by intrinsic scattering and absorption in silica. This will continue boosting the capacity and reach of fiber communication systems.

Significance of low-loss, low-nonlinearity fiber development:

- Record low loss of 0.159 dB/km over 300 km achieved in 2016

- Large effective area of 130 μm²

- Near theoretical minimum loss for silica glass fiber

- Reduces attenuation and nonlinearity limitations

- Enables unrepeated links >250 km at 100G and higher per-channel rates

- Permits denser WDM packing without degradation

- Optimized for high-capacity 5G optical backbone networks

- Ongoing advances target the roughly 0.14 dB/km intrinsic loss floor of silica

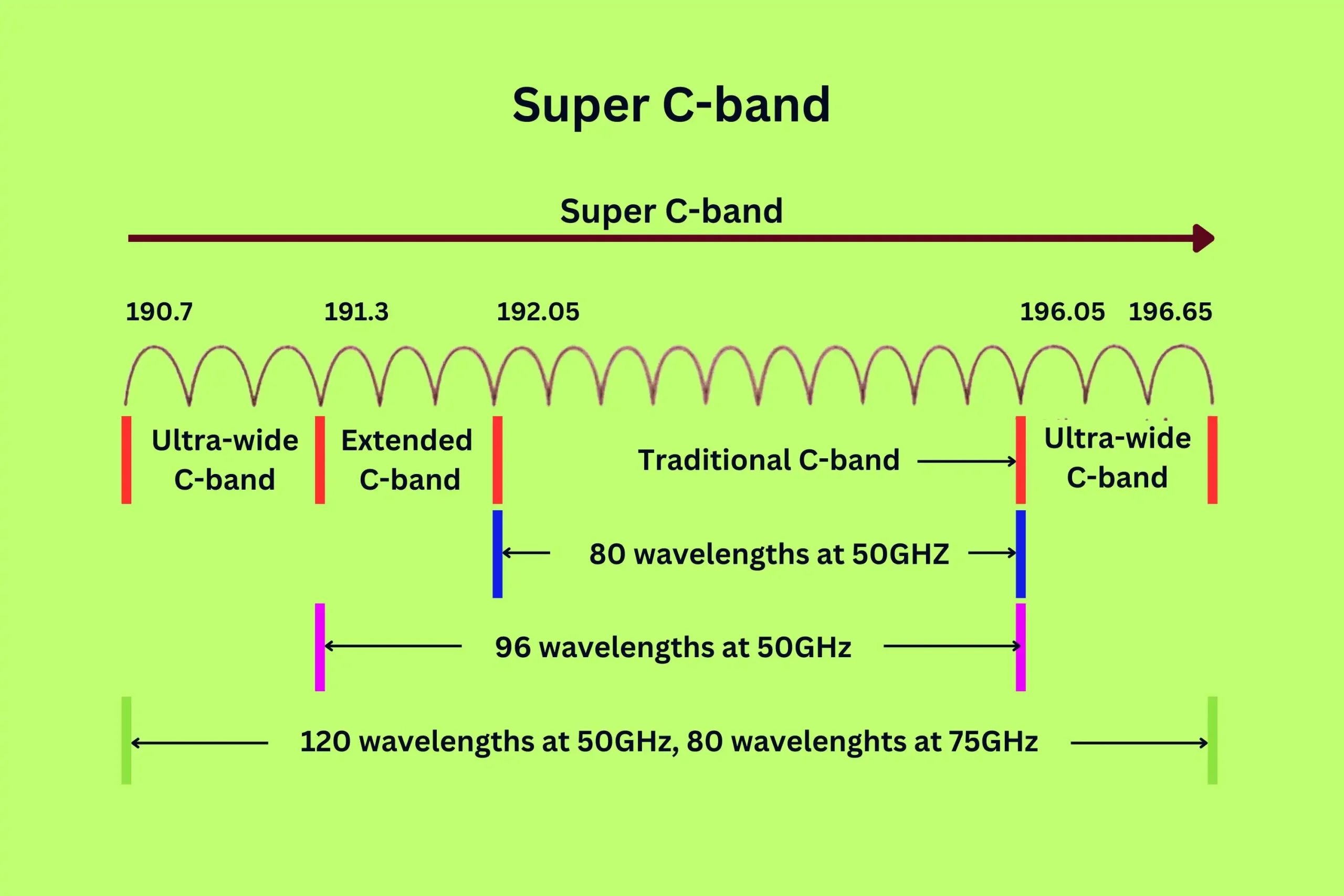

2019 – Super C-Band

Super C-band utilizing the extended 1527-1565 nm range was demonstrated in 2019, increasing capacity by 50% compared to traditional C-band.

Conventional dense WDM uses wavelengths from 1530-1565 nm in the erbium gain band. By expanding to a super C-band from 1527 nm to 1565 nm, more channels can be accommodated. Super C-band squeezes in up to 96 channels at 50 GHz spacing versus 64 channels in standard C-band.

High-performance amplifiers like EDFAs now cover the extended band. Digital signal processing mitigates impairments from Raman scattering and gain ripples. Field trials have validated a 50% capacity gain without requiring new cabling. Super C-band allows network operators to increase fiber capacity to meet surging data demands.

Key benefits of expanding to super C-band:

- Utilizes extended 1527-1565 nm band versus 1530-1565 nm standard

- Accommodates up to 96 channels at 50 GHz spacing

- 50% more capacity than 64 channels in traditional C-band

- Enabled by high-performance amplifiers covering the extended spectrum

- DSP compensates for Raman scattering and gain variations

- Allows networks to scale capacity without new cabling or added fiber

2020 – 25G/50G EPON

25G and 50G Ethernet passive optical network (EPON) standards were approved by IEEE in 2020, providing a major capacity boost for fiber-to-the-home access networks.

Previous generations were capped at 10 Gbps. 25G EPON raises the downstream rate to 25 Gbps, 2.5 times the earlier maximum, while 50G EPON offers bidirectional 50 Gbps. Support for tuned lasers also increases bandwidth efficiency.

The standards maintain backward compatibility with 10G EPON interfaces, easing migration. Field trials have validated extended reaches of 20 km and low latency. 25G/50G EPON allows carriers to deliver multi-gigabit broadband services to compete with cable and 5G fixed wireless access.

Highlights of 25G/50G EPON standards:

- Standardized by IEEE in 2020

- Upgrade from 10G EPON

- 25G EPON provides 25 Gbps downstream; 50G EPON offers 50 Gbps in both directions

- Tuned lasers enhance spectral efficiency

- Backward compatible with 10G EPON

- Field trials validated 20 km reach and low latency

- Enables multi-gigabit fiber broadband services

- Allows fiber to compete with cable and 5G fixed wireless access

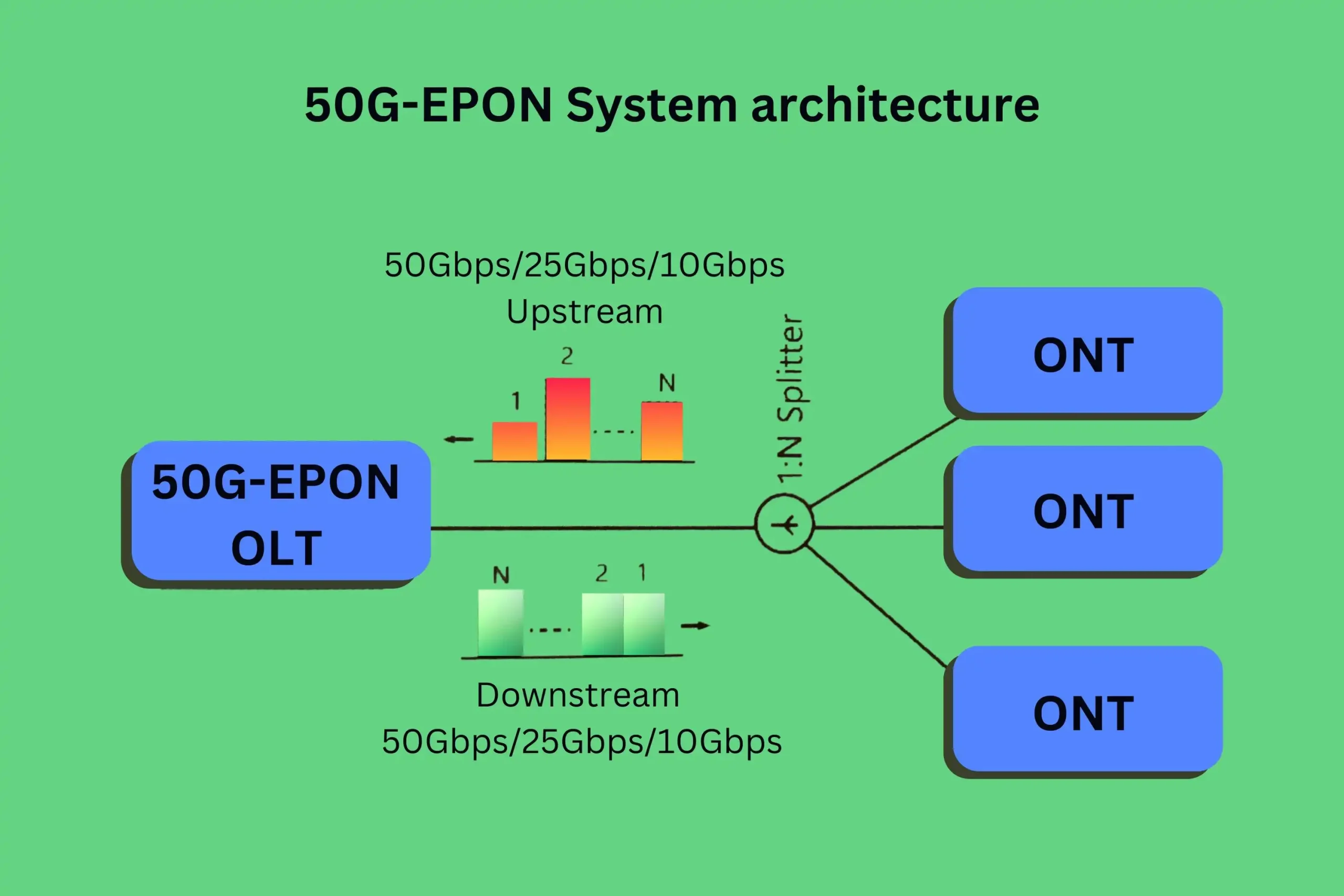

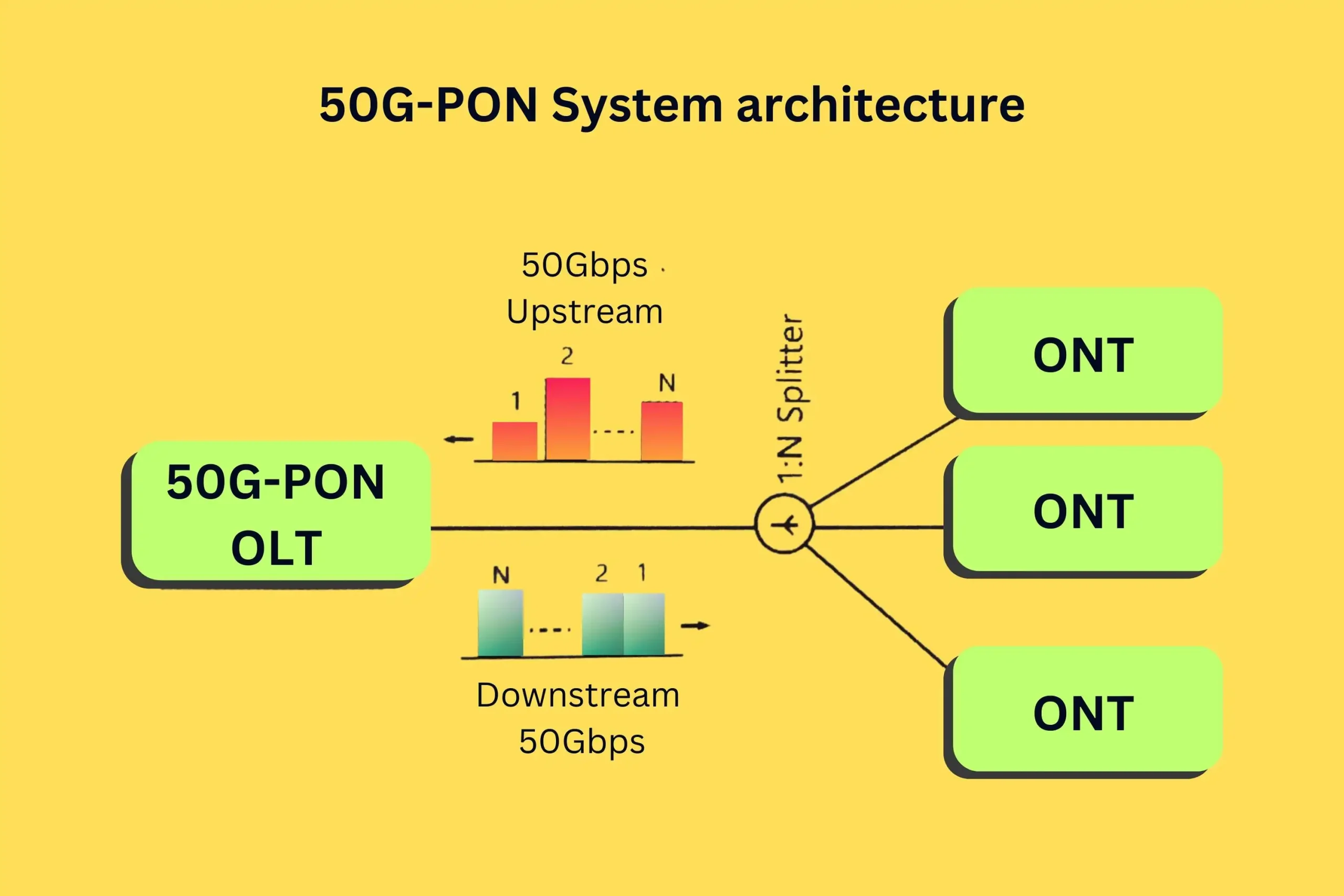

2021 – 50G-PON

The 50G-PON standard was approved by the International Telecommunication Union (ITU) in 2021, delivering symmetrical 50 Gbps capabilities for next-generation PON access networks.

50G-PON boosts speed fivefold compared to the 10G-PON systems widely deployed. It maintains compatibility with previous PON generations over the same fiber, easing upgrades.

New technical capabilities include multiple wavelength bands, sliced spectrum, and flexible rate adaptation. 50G-PON enables carriers to cost-effectively deliver 10 Gbps and faster broadband services to meet explosive demand growth.

Early rollouts commenced in 2022, led by Asian operators. 50G-PON paves the way for later 100G and higher networks, cementing PON’s long-term scalability to match rising fiber-to-the-home speeds.

Key attributes of the 50G-PON standard:

- Approved by ITU in 2021 recommendation G.9804

- Delivers symmetrical 50 Gbps speeds

- 5X increase from widely deployed 10G-PON

- Maintains compatibility with previous PON generations

- New capabilities like multi-band, spectrum slicing, flexible rate adaptation

- Facilitates multi-gigabit fiber broadband services

- Early rollouts started in 2022, led by Asian operators

- Evolution path to 100G-PON and beyond

Summary

The history of fiber optics shows how far communication has progressed. Researchers worked hard for many years to solve problems. They tested new ideas and learned from failures. Each success made networks better and faster.

Now, fiber carries data all over the world. It helps us learn, work, and connect anytime from anywhere. But the story continues. Fiber will keep changing to handle more data in the future. Engineers now focus on pushing limits further. They aim to strengthen networks against coming demands.

New materials and techniques will enhance capacity and distance. Cooperation crosses international borders, too. Together, innovators worldwide will advance this critical technology even more. Fiber optics remains vital as a path towards smarter daily experiences for everyone on the planet.

FAQ

What is the history of fiber optics?

The history of fiber optics can be traced back to the early 1840s, when Daniel Colladon and Jacques Babinet first demonstrated the guiding of light by total internal reflection. However, it wasn’t until the 1960s and 1970s that fiber optics became a practical technology with the development of lasers and low-loss optical fibers.

When did fiber start being used?

The first commercial fiber-optic cable was installed in 1975 by the Dorset police in the UK. Two years later, the first live telephone traffic through fiber optics occurred in Long Beach, California. In the late 1970s and early 1980s, telephone companies began to use fibers extensively to rebuild their communications infrastructure.

What is the history of fiber optic sensing?

Fiber optic sensing is a relatively new technology that has emerged in recent decades. It uses light properties to measure various physical parameters, such as strain, temperature, and pressure. Fiber optic sensors have many applications, including structural health monitoring, environmental monitoring, and medical diagnostics.

What company invented fiber optics?

The invention of fiber optics is often attributed to several individuals and companies. Daniel Colladon and Jacques Babinet are credited with demonstrating the principle of light guiding in the early 1840s. In the 1960s, researchers at Bell Laboratories developed the first working fiber-optic data transmission system. Corning Glass Works played a key role in developing low-loss optical fibers, which made fiber optics a practical technology.

Is fiber-optic cable obsolete?

No, fiber-optic cable is not obsolete. It is a very durable and reliable technology that is capable of transmitting large amounts of data over long distances. Fiber optics is the backbone of the modern telecommunications network, and it is also being used in a growing number of other applications, such as data centers, medical imaging, and industrial automation.